Papers

|

Training AI to recognize distances, objects, and requests of interest to the blind community

IEEE Conference Proceedings and PubMed, 2023

Tharangini Sankarnarayanan, Lev Paciorkowski, Khevna Parikh, Giles Hamilton-Fletcher, Diwei Sheng, Chen Feng, Todd Hudson, John-Ross Rizzo, Kevin C. Chan

I'll be presenting this work on behalf of my team at the 45th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (IEEE EMBC Sydney) in July 2023. Under the supervision of Kevin C. Chan, the project focuses on training an object detection model to recognize items relevant to blind people in their daily lives. The pipeline begins by identifying thirty-five objects of interest to the blind community through surveys, questionnaires, and Microsoft ORBIT research. It then trains a 'You Only Look Once' (YOLO) bounding box object detection model on a resampled version of the publicly available Google Open Images V6 dataset. For end users, the model is intended for incorporation into portable assistive technologies.

|

|

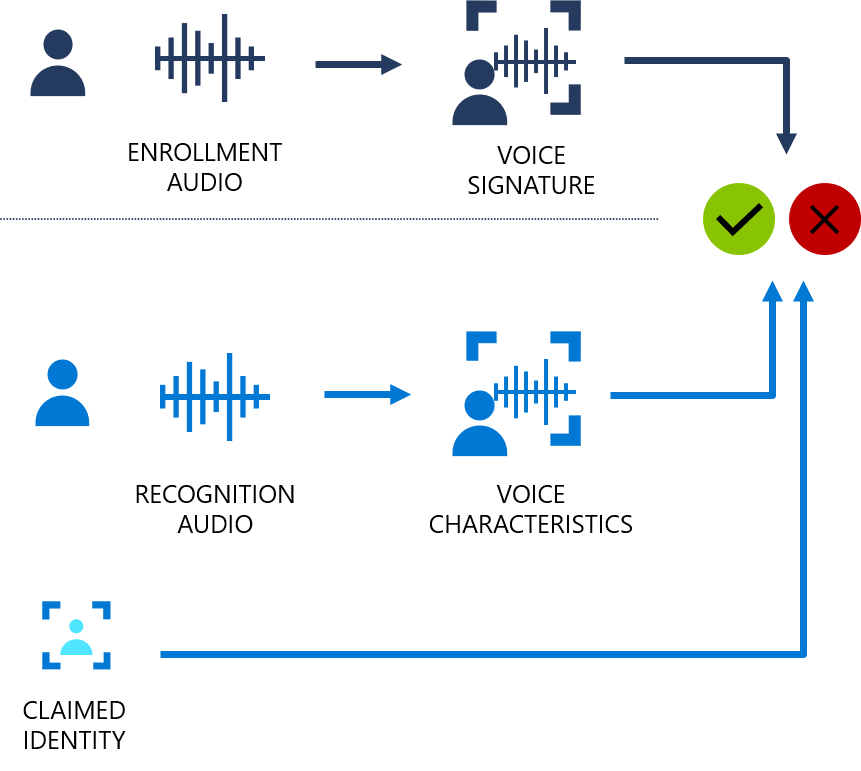

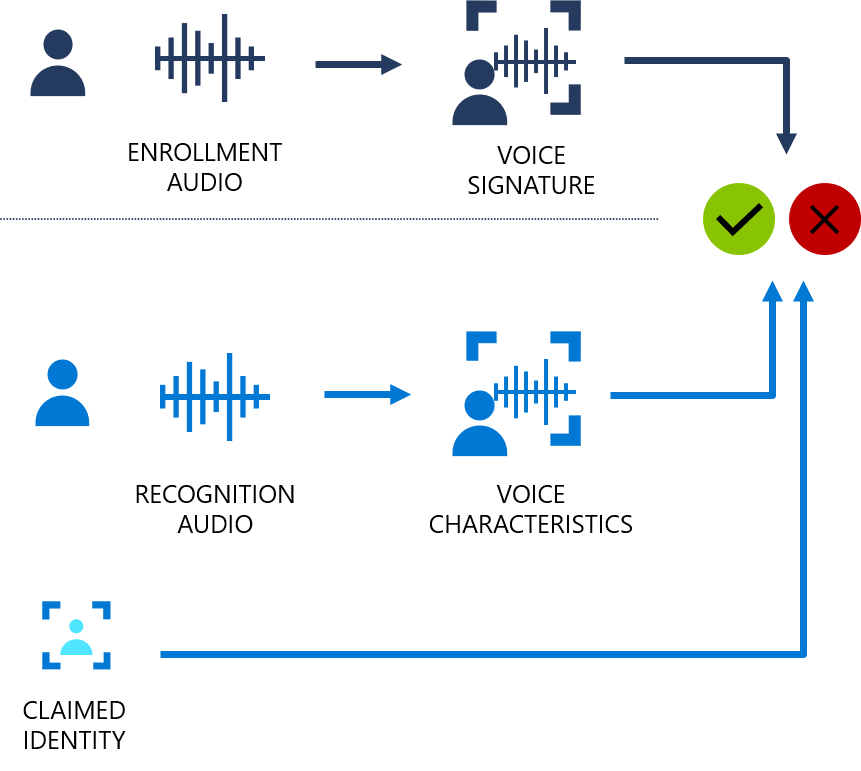

Convolution Neural Network and Gated Recurrent Units based Speaker Recognition of Whispered Speech

AIP ICMAT Conference Proceedings

Sangeetha J, Tharangini Narayanan, Rekha D, Umamaheshwari P

Presented at the International Conference on Mathematics and its Applications in Technology (ICMAT 2023). Under the supervision of J Sangeetha, we analyzed different models for the speaker recognition task, focusing specifically on whispered speech. We started by exploring feature extraction methods such as Mel Frequency Cepstral Coefficients (MFCC) and Linear Predictive Coefficients (LPC). We then evaluated various machine learning and deep learning models, including Support Vector Machine (SVM), k-Nearest Neighbor (k-NN), Extra Trees, Random Forest, Gaussian Mixture Model (GMM), Convolutional Neural Network (CNN), and Gated Recurrent Unit (GRU). Each model was run on the CHAIN Whispered Speech Corpus and evaluated using metrics such as precision, recall, F1 score, and accuracy. Our results suggested that the GRU model performed best, followed closely by the 2D-CNN and by GMM with MFCC. This research contributes to the field of speaker recognition, particularly for whispered speech, with potential applications in security and biometrics.
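As a rough illustration of the evaluation metrics named above, here is a minimal pure-Python sketch that computes accuracy and macro-averaged precision, recall, and F1 from true and predicted speaker labels. This is not the code used in the paper: the function name `classification_metrics`, the choice of macro averaging, and the toy speaker labels are all assumptions made for the example.

```python
from collections import Counter


def classification_metrics(y_true, y_pred):
    """Return accuracy and macro-averaged precision, recall, and F1.

    Hypothetical helper for illustration; macro averaging is an assumption.
    """
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equal length")

    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted this speaker, but it was wrong
            fn[truth] += 1  # missed the true speaker

    labels = sorted(set(y_true) | set(y_pred))
    precisions, recalls, f1s = [], [], []
    for label in labels:
        p_den = tp[label] + fp[label]
        r_den = tp[label] + fn[label]
        precision = tp[label] / p_den if p_den else 0.0
        recall = tp[label] / r_den if r_den else 0.0
        pr_sum = precision + recall
        f1 = 2 * precision * recall / pr_sum if pr_sum else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)

    n = len(labels)
    return {
        "accuracy": sum(tp.values()) / len(y_true),
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


# Toy labels (made up for the example, not results from the paper).
metrics = classification_metrics(
    ["spk_a", "spk_a", "spk_b", "spk_b"],
    ["spk_a", "spk_b", "spk_b", "spk_b"],
)
```

In practice a library routine such as scikit-learn's metrics module would normally be used instead; the hand-written version is only meant to make the definitions explicit.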

|

|

Realistic Face Rendering for 3D Mixed Reality Experience

FIB - Facultat d'Informàtica de Barcelona, 2019, pdf

Tharangini Sankarnarayanan, Josep Ramon Morros, Javier Ruiz Hidalgo, Núria Castell Ariño

I designed and prototyped a deep learning pipeline for time-series analysis of video communication data as part of an in-depth research project. The pipeline uses bounding box detection to locate Virtual Reality headsets in video captures, creates a realistic model of the user’s face, and uses it to replace the part covered by the headset, enabling a mixed reality experience. The accuracy of the resulting prototype was 79%. The project was advised by Josep Ramon Morros, Javier Ruiz Hidalgo and Núria Castell Ariño.

|

Projects

|

Language Generation, Modeling and Bias Evaluation on BOLD Dataset

(2022-12-24)

Supervised by Tal Linzen. The project evaluates the tendency of Language Models (LMs) to generate harmful biases that stem from stereotypes and spread hostile generalizations. Text is generated using prompts from the Bias in Open-Ended Language Generation Dataset (BOLD). Toxicity, sentiment, and regard metrics are computed on the generated texts to evaluate the implicit bias in pre-trained language models. The project compares the degree of bias toward different social groups across GPT-2, CTRL, XLNet, GPT-Neo, and OPT.
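To make the comparison step concrete, the sketch below averages a per-text score (for example, a toxicity score) for each model and social group pair. It is only an illustration under stated assumptions: the function name `mean_score_by_group`, the record layout, and the placeholder model names, group names, and scores are invented for the example and are not taken from the project.

```python
from collections import defaultdict


def mean_score_by_group(records):
    """Average a metric score for every (model, group) pair.

    `records` is an iterable of (model, group, score) tuples.
    Hypothetical helper; the record layout is an assumption.
    """
    totals = defaultdict(lambda: [0.0, 0])  # (model, group) -> [sum, count]
    for model, group, score in records:
        entry = totals[(model, group)]
        entry[0] += score
        entry[1] += 1
    return {key: total / count for key, (total, count) in totals.items()}


# Placeholder scores, made up for the example (not project results).
toy_records = [
    ("model_a", "group_x", 0.1),
    ("model_a", "group_x", 0.3),
    ("model_a", "group_y", 0.5),
    ("model_b", "group_x", 0.2),
]
averages = mean_score_by_group(toy_records)
```

Comparing the averages for the same group across models, or for different groups within one model, gives a simple first view of where generated text scores differ.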

|

|

Job Board for Incarcerators

Marron Institute of Urban Management: Litmus Project

(2022-08-28)

Under the supervision of Angela Hawken, I designed a tool that connects employers committed to hiring people with criminal records with qualified applicants who are being released from the Illinois Department of Corrections. As part of the Litmus Project, I collaborated with a group of 10 end users to build features across the software using Python, Flask, and CSS. The prototype of the tool was presented to incarcerators at Kewanee Life Skills Re-entry Center and to the Director of the Illinois Department of Corrections, and the tool will be launched in August 2023.

|

|

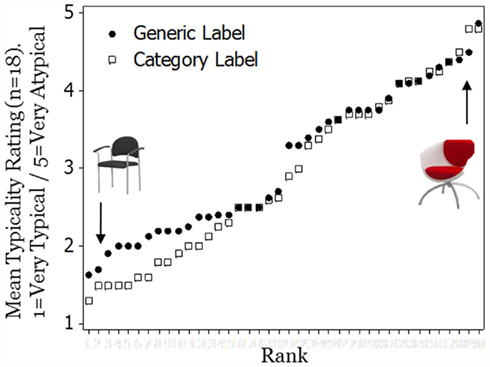

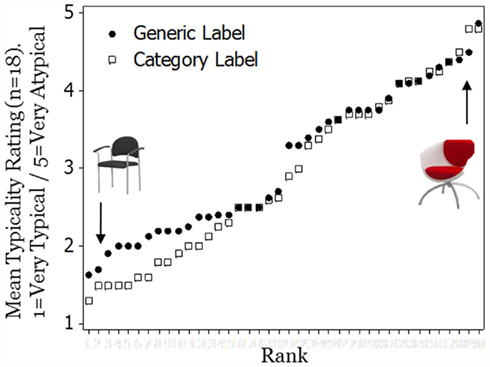

Comparing Typicality Ratings between Human and Model Representations for Images

DS-GA 1009: Computational Cognitive Modelling

(2022-05-10)

Supervised by Brendan Lake, the project revolves around an extension that compares the typicality ratings given to each image in a domain by humans, Convolutional Networks, and Vision Transformers. The domains under consideration were rose, mushroom, dinosaur, fish, and couch. Using PyTorch, I experimented with the Convolutional Networks GoogLeNet and ResNet and with Vision Transformers. Vision Transformers outperform Convolutional Networks in typicality prediction and model the human mind in 3 out of the 5 domains.
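One common way to compare human and model typicality ratings is a rank correlation computed per domain. The sketch below implements Spearman's rank correlation in pure Python. Whether the project used this particular measure is an assumption, and the function names and toy ratings are hypothetical.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Toy ratings for one domain (made up, not data from the project).
human_ratings = [6.5, 3.0, 5.0, 1.5]
model_scores = [0.9, 0.4, 0.7, 0.2]
rho = spearman(human_ratings, model_scores)
```

A higher correlation for one model family within a domain would indicate that its typicality scores order the images more like human raters do.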

|